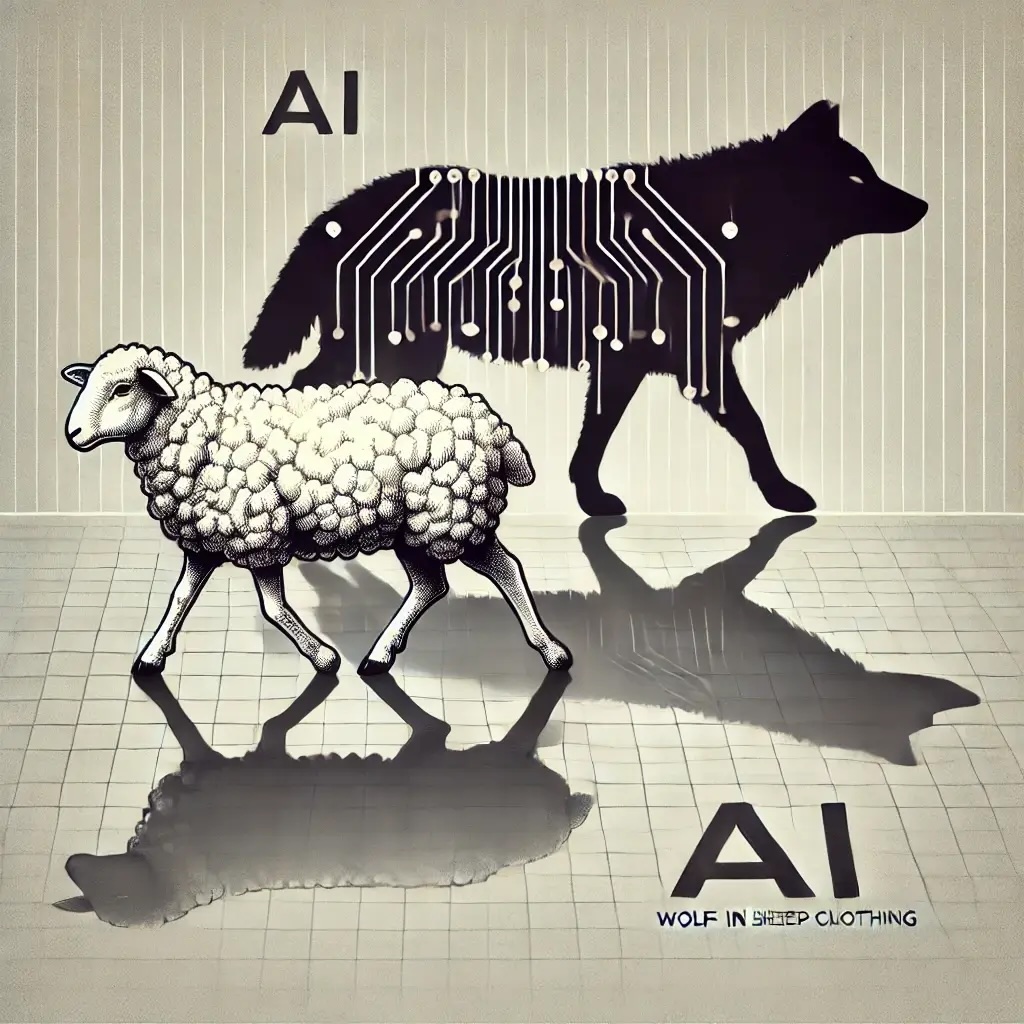

While students and instructors may have enjoyed a summer break, AI certainly did not take time off! It continues to advance rapidly, with innovations like AI-powered podcasts leading the charge. As these developments unfold, higher education remains deeply invested in the intersection of AI use and academic integrity. While AI has the potential to support and enhance learning, it can also be misused as a shortcut in the educational process. Accurately detecting AI-generated content is becoming more difficult, prompting educators to rethink traditional assignments. To maintain academic rigor, courses may need to evolve, incorporating tasks that are less easily completed by AI and more focused on critical thinking and original analysis. This post serves as an introductory guide to detection and also offers insights into how to prevent AI misuse.

To begin the discussion surrounding appropriate AI use in the classroom, the LTC has built a set of recommendations, outlined below. These recommendations serve as a guideline for open and transparent communication about AI and are not exhaustive; your course policies should suit your teaching!

- Plainly state how AI may be used in your course, and include this position in your syllabus.

- AI statements are part of the required UW-Whitewater Syllabus Language

- Check out the AI Syllabus Language KB for examples

- Provide context for your decision. Explaining why you do or do not feel AI is appropriate for your course helps students understand how using it, or not using it, fits into the learning process.

- Explain how you grade and what is considered cheating or misuse in your course.

- Ensure students know how to provide documentary evidence of their work, such as copies of outlines, rough drafts, or methodology statements.

Instructors have detection tools at their disposal to identify students who may have inappropriately used AI to generate text for an assignment or failed to cite AI as part of their creative process. While detection tools may seem like an easy solution to the improper use of AI, they come with concerns of their own.

- Detection software is more likely to flag authentic student work as AI generated when the student is a non-native English speaker or has a disability.

- Grammar and spell check programs are a form of AI; hence, they can trigger a detector even if the ideas and content are the student's own.

- As AI technology continues to evolve, detection software will remain behind the improvement cycles of generative AI. It has already become more difficult to detect AI-generated text because of “humanizing” applications: software that makes writing appear less generic and formulaic, which are the typical indicators of AI.

UW-Whitewater instructors have access to AI detection through Turnitin, which can be integrated with Canvas. A few tips to use the Turnitin detector successfully:

- Only long-form prose can be submitted for detection. Bullet points, incomplete sentences, or short paragraph answers cannot be evaluated.

- Detection is only available in English.

- The AI indicator is located inside the Similarity Report in Turnitin. Students are not able to see the AI indicator, even if you allow students to view their Similarity Score immediately after submission.

- If the document was evaluated successfully, the AI indicator box will be blue. If it is red, the submission could not be evaluated. If it is grey, there was an error with the document, such as an incorrect file type, a problem with file size, or a length over 15,000 words.

- The AI indicator displays a score between 0 and 100. The displayed percentage indicates how much of the qualifying text in the submission was determined to be generated by AI. Not all text within a submission qualifies; a bulleted list, for example, is not considered. Hence, a score of 100% does not mean the document was 100% AI generated, but rather that 100% of the highlighted, qualifying text was likely to be AI generated.

- There is no target score; always use the indicator percentage as a starting point for a discussion with the student.

- Turnitin can produce false positives. Company-provided documentation reports an error rate of 1%. In practice, error rates as high as 4% have been reported.
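
For readers who like to see the arithmetic behind the last few tips, here is a small Python sketch. It is an illustration only, not Turnitin's actual algorithm: the function names, the word counts, and the class size of 150 are all hypothetical, and it assumes the reported 1% and 4% error rates apply to each authentic submission independently.

```python
def ai_indicator_percent(flagged_words: int, qualifying_words: int) -> float:
    """Share of the *qualifying* text that was flagged, as a percentage.

    Hypothetical model: text that cannot be evaluated (for example, bulleted
    lists) is left out of the denominator entirely.
    """
    if qualifying_words <= 0:
        raise ValueError("no qualifying text to evaluate")
    return 100.0 * flagged_words / qualifying_words


def expected_false_flags(authentic_submissions: int, error_rate: float) -> float:
    """Expected number of authentic submissions flagged by mistake.

    Assumes the error rate applies to every authentic submission independently.
    """
    return authentic_submissions * error_rate


# Hypothetical submission: 1,000 words in total, of which 400 sit in bulleted
# lists (not evaluated) and 600 are long-form prose (qualifying).
total_words = 1000
qualifying_words = 600
flagged_words = 600  # every qualifying word was flagged

indicator = ai_indicator_percent(flagged_words, qualifying_words)
share_of_whole_document = 100.0 * flagged_words / total_words

print(f"AI indicator: {indicator:.0f}%")
print(f"Share of the whole document flagged: {share_of_whole_document:.0f}%")

# Hypothetical course with 150 authentic submissions, at the two reported rates.
for rate in (0.01, 0.04):
    mistaken = expected_false_flags(150, rate)
    print(f"Error rate {rate:.0%}: about {mistaken:.1f} authentic submissions flagged")
```

In this made-up case the indicator reads 100% even though only 60% of the document was evaluated and flagged, and even a small error rate translates into several authentic submissions being flagged across a large course. Both points are reasons to treat the score as a conversation starter rather than a verdict.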

Given the limitations of Turnitin, instructors may opt to run student submissions through a third-party detection service. This requires several careful considerations:

- As per UW-System legal, students must be informed of their instructor’s intent to use a third-party detector and given the chance to provide explicit consent. Doing otherwise may constitute a FERPA violation.

- The LTC and ITS cannot provide support for detection tools purchased by individuals or departments.

In short, AI detection remains a nuanced conversation that requires open communication between instructors and students with expectations clearly defined. The LTC welcomes questions, ideas, and concerns about AI; we are here to help!

Looking for more? Join the LTC for a series of workshops on AI! Or browse our summaries from past events.